I appreciate killing two birds with one stone. I’m all about efficiency, and if I can satisfy more than one task with a simple, productive process, then I’m going to do it. Today, I’m going to:

- Show you why you should have a backup copy of previous agent software and how to do this.

- Create a documented process to restore previous images of an agent to a target host.

- Create the content section for the Collaborate HOL on Gold Images and make it reproducible.

- Create a customer demonstration of the Gold Agent Image.

- AND publish a blog post on how to do it all.

![igotthis]()

I have a pristine Enterprise Manager 13c environment that I’m working in. “Polluting” it with a 12.1.0.5 or earlier agent seems contrary to what anyone would want to do in a real-world EM, but there may very well be reasons for having to do so:

- A plugin or bug in the EM13c agent requires a previous agent version to be deployed.

- A customer wants to see a demo of the EM13c gold agent image, which requires a host monitored by an older 12c agent.

Retaining Previous Agent Copies

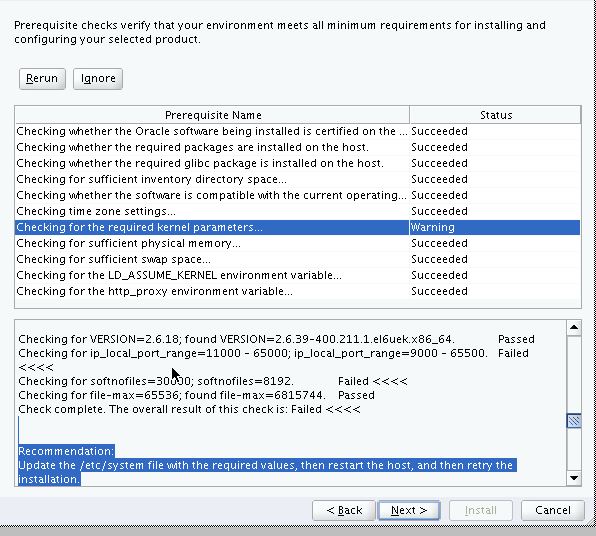

It would appear to be a simple process. Let’s say you have the older version of the agent you wish to deploy in your software repository. You can access the software versions in your software library by clicking on Setup, Extensibility, Self-Update.

![extensibl1]()

Agent Software is first in the list, so it’s already highlighted; otherwise, click in the center of its row, where there’s no link, and then click on Actions and Open to see the details of the Agent Software you’ve downloaded to your Software Library.

If you scroll down through all the agent versions available, you can see that the 12.1.0.5 agent for Linux is already in the software library. If we try to deploy it from Cloud Control, though, we notice that only a platform is offered, not a version, which means the latest, 13.1.0.0.0, will be deployed. So what if we want to deploy an earlier one?

Silent Deploy of an Agent

The Enterprise Manager Command Line Interface (EMCLI) offers us a lot more control over what we can request, so let’s try working with the agent software from the command line.

Log into EMCLI from the OMS host (or another host with EMCLI installed):

[oracle@em12 bin]$ ./emcli login -username=sysman

Enter password :

Login successful

First, get information about the agent versions stored in the software library:

[oracle@em12 bin]$ ./emcli get_supportedplatforms

Error: The command name "get_supportedplatforms" is not a recognized command.

Run the "help" command for a list of recognized commands.

You may also need to run the "sync" command to synchronize with the current OMS.

[oracle@em12 bin]$ ./emcli get_supported_platforms

-----------------------------------------------

Version = 12.1.0.5.0

Platform = Linux x86-64

-----------------------------------------------

Version = 13.1.0.0.0

Platform = Linux x86-64

-----------------------------------------------

Platforms list displayed successfully.
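If you’d rather script this check than eyeball it, the listing is easy to parse. Here’s a minimal POSIX shell sketch, assuming the output keeps the `Version = ...` / `Platform = ...` layout shown above; `parse_platforms` and `has_agent_version` are hypothetical helper names, not EMCLI verbs.

```shell
# parse_platforms: read `emcli get_supported_platforms` output on stdin and
# print one "version|platform" pair per line.
parse_platforms() {
  awk -F' = ' '
    /^Version = / { ver = $2; sub(/[ \t\r]+$/, "", ver) }
    /^Platform = / {
      plat = $2; sub(/[ \t\r]+$/, "", plat)
      if (ver != "") print ver "|" plat
      ver = ""
    }
  '
}

# has_agent_version VERSION: succeed if VERSION appears in the listing
# supplied on stdin.
has_agent_version() {
  parse_platforms |
    awk -F'|' -v want="$1" '$1 == want { found = 1 } END { exit (found ? 0 : 1) }'
}
```

For example, `./emcli get_supported_platforms | has_agent_version 12.1.0.5.0 && echo "12.1.0.5.0 is in the library"`.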

I already have the 13.1.0.0.0 version. I want to export 12.1.0.5.0 to a zip file that can be deployed elsewhere:

[oracle@em12 bin]$ ./emcli get_agentimage -destination=/home/oracle/125 -platform="Linux x86-64" -version=12.1.0.5.0

ERROR:You cannot retrieve an agent image lower than 13.1.0.0.0. Only retrieving an agent image of 13.1.0.0.0 or higher is supported by this command.

OK, so much for that idea!

So what have we learned here? Use this process to “export” a copy of your previous version of the agent software BEFORE upgrading Enterprise Manager to a new version.
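Since the lesson is to export before you upgrade, it may be worth wrapping the export in a small script you run on the old EM. The sketch below is illustrative only: the function name and backup path are hypothetical, and it assumes you’ve already run `emcli login` and are in the OMS `bin` directory (override `EMCLI` otherwise). It calls `get_supported_platforms` once, then `get_agentimage` for each version/platform pair it finds.

```shell
# backup_agent_images: export every agent image the OMS lists into its own
# directory, so older versions survive an EM upgrade.
#   EMCLI        emcli command to call (default ./emcli)
#   BACKUP_ROOT  hypothetical backup location (default /home/oracle/agent_backups)
# Assumes "emcli login" has already been run.
backup_agent_images() {
  emcli_cmd=${EMCLI:-./emcli}
  backup_root=${BACKUP_ROOT:-/home/oracle/agent_backups}
  "$emcli_cmd" get_supported_platforms |
    awk -F' = ' '
      /^Version = /  { ver = $2 }
      /^Platform = / { if (ver != "") print ver "|" $2; ver = "" }
    ' |
    while IFS='|' read -r ver plat; do
      # One directory per version/platform, e.g. 12.1.0.5.0/Linux_x86-64
      dest="$backup_root/$ver/$(printf '%s' "$plat" | tr ' ' '_')"
      # </dev/null keeps emcli from consuming the loop's input
      mkdir -p "$dest" &&
        "$emcli_cmd" get_agentimage -destination="$dest" \
          -platform="$plat" -version="$ver" </dev/null
    done
}
```

On an EM13c OMS, the pre-13.1 versions will still fail with the error shown above, so this only helps if it runs before the upgrade.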

Now, lucky for me, I have multiple EM environments, including an EM 12.1.0.5 one that I could export the agent software from using the steps outlined above. I’ve SCP’d the zip over to the EM13c environment to deploy from and will retain that copy for future endeavors. Remember, we just took care of task number one on our list:

1. Show you why you should have a backup copy of previous agent software and how to do this.

Silent Deploy of Previous Agent Software

If we look in our folder, we can see our zip file:

[oracle@osclxc ~]$ ls

12.1.0.5.0_AgentCore_226.zip

p20299023_121020_Linux-x86-64.zip

20299023 p6880880_121010_Linux-x86-64.zip

I’ve already copied it over to the target host, into the folder I’ll deploy from:

scp 12.1.0.5.0_AgentCore_226.zip oracle@host3.oracle.com:/home/oracle/.

Now I need to unzip it and update the entries in the response file (agent.rsp):

OMS_HOST=OMShostname.oracle.com

# EM_UPLOAD_PORT: get this from running "emctl status oms -details" on the OMS host

EM_UPLOAD_PORT=4890

# AGENT_REGISTRATION_PASSWORD: if you don't know it, you can set a new one in EM Cloud Control

AGENT_REGISTRATION_PASSWORD=<password>

AGENT_INSTANCE_HOME=/u01/app/oracle/product/agent12c

AGENT_PORT=3872

b_startAgent=true

ORACLE_HOSTNAME=host.oracle.com

s_agentHomeName=<display name for target>
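If you deploy older agents more than once, you might generate the response file instead of hand-editing it. This is a sketch under assumptions: `upload_port_from_status` assumes `emctl status oms -details` prints a line such as `HTTPS Upload Port : 4903` (pass `'HTTP Upload Port'` if that’s the port you use), and `write_agent_rsp` is a hypothetical helper that reads its values from shell variables named after the response-file keys above.

```shell
# upload_port_from_status [LABEL]: read `emctl status oms -details` output on
# stdin and print the port from the first line containing LABEL
# (default "HTTPS Upload Port").
upload_port_from_status() {
  label=${1:-HTTPS Upload Port}
  awk -F':' -v label="$label" 'index($1, label) { gsub(/[^0-9]/, "", $2); print $2; exit }'
}

# write_agent_rsp FILE: write an agent response file from shell variables
# named after the keys. AGENT_PORT defaults to 3872; b_startAgent is true.
write_agent_rsp() {
  rsp=$1
  for key in OMS_HOST EM_UPLOAD_PORT AGENT_REGISTRATION_PASSWORD \
             AGENT_INSTANCE_HOME ORACLE_HOSTNAME s_agentHomeName; do
    eval "val=\${$key:-}"
    if [ -z "$val" ]; then
      echo "write_agent_rsp: $key is not set" >&2
      return 1
    fi
  done
  # Subshell keeps the restrictive umask local; a newly created file is
  # readable by its owner only, since it holds the registration password.
  (
    umask 077
    cat > "$rsp" <<EOF
OMS_HOST=$OMS_HOST
EM_UPLOAD_PORT=$EM_UPLOAD_PORT
AGENT_REGISTRATION_PASSWORD=$AGENT_REGISTRATION_PASSWORD
AGENT_INSTANCE_HOME=$AGENT_INSTANCE_HOME
AGENT_PORT=${AGENT_PORT:-3872}
b_startAgent=true
ORACLE_HOSTNAME=$ORACLE_HOSTNAME
s_agentHomeName=$s_agentHomeName
EOF
  )
}
```

For example, unzip the image on the target host with `unzip -q 12.1.0.5.0_AgentCore_226.zip -d /home/oracle/agent_stage` (the staging directory is just an example), run `emctl status oms -details | upload_port_from_status` on the OMS host to get the port, set the variables, and call `write_agent_rsp /home/oracle/agent.rsp`.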

Now run the deployment script, including the -ignorePrereqs argument to skip the version prerequisite check, along with our response file:

$ ./agentDeploy.sh -ignorePrereqs AGENT_BASE_DIR=/u01/app/oracle/product RESPONSE_FILE=/home/oracle/agent.rsp
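To keep a record of the run, a small wrapper can copy the installer output to a log and fail unless the run reports success. A minimal sketch: `deploy_agent`, `DEPLOY_LOG` and the default log location are hypothetical, and the success string is the one that appears in the deployment output in this post.

```shell
# deploy_agent STAGE_DIR BASE_DIR RSP_FILE
# Runs agentDeploy.sh from the unzipped agent image in STAGE_DIR, copies its
# output to a log, and returns non-zero unless the log contains
# "Agent Deployment Successful".
# DEPLOY_LOG (hypothetical) overrides the default log path under $HOME.
deploy_agent() {
  stage=$1
  base=$2
  rsp=$3
  log=${DEPLOY_LOG:-$HOME/agentDeploy_$(date +%Y%m%d%H%M%S).log}

  if [ ! -x "$stage/agentDeploy.sh" ]; then
    echo "deploy_agent: no executable agentDeploy.sh in $stage" >&2
    return 1
  fi
  if [ ! -r "$rsp" ]; then
    echo "deploy_agent: cannot read response file $rsp" >&2
    return 1
  fi

  # -ignorePrereqs skips the prerequisite checks, as in the command above.
  "$stage/agentDeploy.sh" -ignorePrereqs \
    AGENT_BASE_DIR="$base" RESPONSE_FILE="$rsp" 2>&1 | tee "$log"

  grep -q 'Agent Deployment Successful' "$log"
}
```

For example, `deploy_agent /home/oracle/agent_stage /u01/app/oracle/product /home/oracle/agent.rsp`, where the staging directory is wherever you unzipped the image.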

The script should deploy the agent successfully, ending with output like this:

Agent Configuration completed successfully

The following configuration scripts need to be executed as the "root" user.

#!/bin/sh

#Root script to run

/u01/app/oracle/core/12.1.0.5.0/root.sh

To execute the configuration scripts:

1. Open a terminal window

2. Log in as "root"

3. Run the scripts

Agent Deployment Successful.
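Rather than copying the root script path by hand, you could pull it out of the saved deployment output. A small sketch, assuming the output was saved to a file (for example with `tee`) and that root scripts appear as bare absolute paths ending in `root.sh`, as above; `root_scripts_from_log` is a hypothetical name.

```shell
# root_scripts_from_log LOG: print each root script listed in saved
# agentDeploy.sh output, i.e. lines that are an absolute path ending in root.sh.
root_scripts_from_log() {
  awk '{ sub(/[ \t\r]+$/, "") } /^\/.*\/root\.sh$/ { print }' "$1"
}
```

Then log in as root and run each script it prints.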

Check the status of the agent and confirm that an upload is possible:

[oracle@fs3 bin]$ ./emctl status agent

Oracle Enterprise Manager Cloud Control 12c Release 5

Copyright (c) 1996, 2015 Oracle Corporation. All rights reserved.

---------------------------------------------------------------

Agent Version : 12.1.0.5.0

OMS Version : 13.1.0.0.0

Protocol Version : 12.1.0.1.0

Agent Home : /u01/app/oracle/product/agent12c

Agent Log Directory : /u01/app/oracle/product/agent12c/sysman/log

Agent Binaries : /u01/app/oracle/product/core/12.1.0.5.0

Agent Process ID : 2698

Parent Process ID : 2630
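To check versions from a script, for example after several deployments, you could pull individual fields out of this output. A minimal sketch, assuming the `Name : value` layout shown above; `agent_status_field` is a hypothetical helper.

```shell
# agent_status_field NAME: read `emctl status agent` output on stdin and print
# the value from the "NAME : value" line, e.g. NAME="Agent Version".
agent_status_field() {
  awk -v name="$1" '
    {
      i = index($0, " : ")
      if (i == 0) next
      key = substr($0, 1, i - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", key)
      if (key == name) {
        val = substr($0, i + 3)
        gsub(/^[ \t]+|[ \t\r]+$/, "", val)
        print val
        exit
      }
    }'
}
```

For example, `./emctl status agent | agent_status_field "Agent Version"` should print 12.1.0.5.0 for the output above. For the upload half of the check, `./emctl upload agent` forces an upload to the OMS and `./emctl pingOMS` tests connectivity to it.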

You should see your host in your EM13c environment now.

![fs31]()

OK, that takes care of task number two:

2. Create a documented process to restore previous images of an agent to a target host.

Using a Gold Agent Image

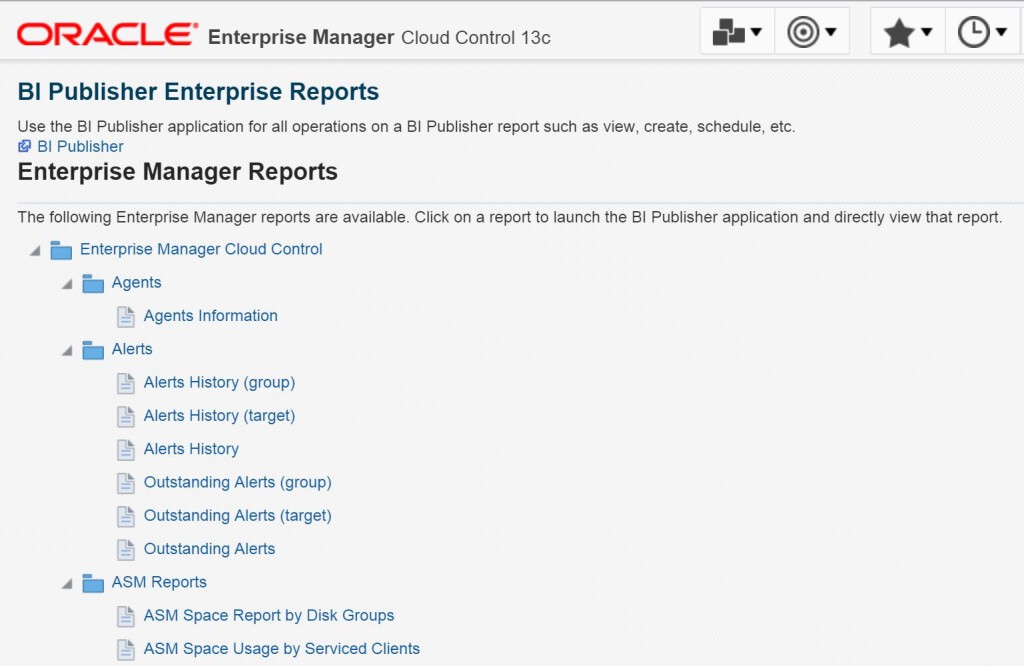

From here, we can effectively demonstrate the EM13c Gold Agent Image. Click on Setup, Manage Cloud Control, Gold Agent Image.

I’ve already created a Gold Agent Image in a previous post, so now it’s time to manage subscriptions, using the Manage Subscriptions link at the center of the page, toward the right side. Click on it, then subscribe hosts by clicking “Subscribe” and adding them to the list (by using the Shift or Ctrl key, you can choose more than one at a time).

![gai1]()

As you can see, I’ve subscribed all my agents to the Gold Agent Image, and it will now check each agent’s version and add it to be managed by the image. This includes my new host on the 12.1.0.5.0 agent. Keep in mind that each agent is placed in a blackout as part of being added, so be aware of this as you refresh and monitor the additions.

Once the added hosts update to show that they’re available for update, click on the agent you wish to update (you can even choose one that’s already on the current version) and click on Update, Current Version. This deploys the Current version of the gold image the agent is subscribed to, via an EM job:

![agent_upd]()

The job runs for a while as it checks everything out, deploys the software and updates the agent, placing the agent in a blackout so as not to alarm anyone while you work on this task. Once complete, the agent will be upgraded to the same release as the gold agent image you created!

![gaig]()
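The subscription decides which agents are behind by comparing versions. If you keep your own list of agents and versions, a quick local check after the update job might look like the sketch below. It’s purely illustrative: the `agent_name version` input format is a hypothetical list you maintain, not output from any EM command, and `version_lt` relies on GNU `sort -V`.

```shell
# version_lt A B: succeed if version A is strictly lower than version B.
# Relies on GNU coreutils "sort -V" (version sort) being available.
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# agents_below GOLD_VERSION: read "agent_name version" lines on stdin (a
# hypothetical list you maintain) and print each agent whose version is lower
# than GOLD_VERSION. Lines missing either field are skipped.
agents_below() {
  while read -r name ver; do
    [ -n "$name" ] && [ -n "$ver" ] || continue
    if version_lt "$ver" "$1"; then
      printf '%s\n' "$name"
    fi
  done
}
```

Version sort matters here: a plain string sort would put 12.1.0.10.0 before 12.1.0.5.0.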

Well, with that step, I believe I’ve taken care of the next three items on my list! If you’d like to know more about Gold Agent Images, outside of the scenic route I took you on today, check out the Oracle documentation.

Tags: em13c, enterprise manager 13c


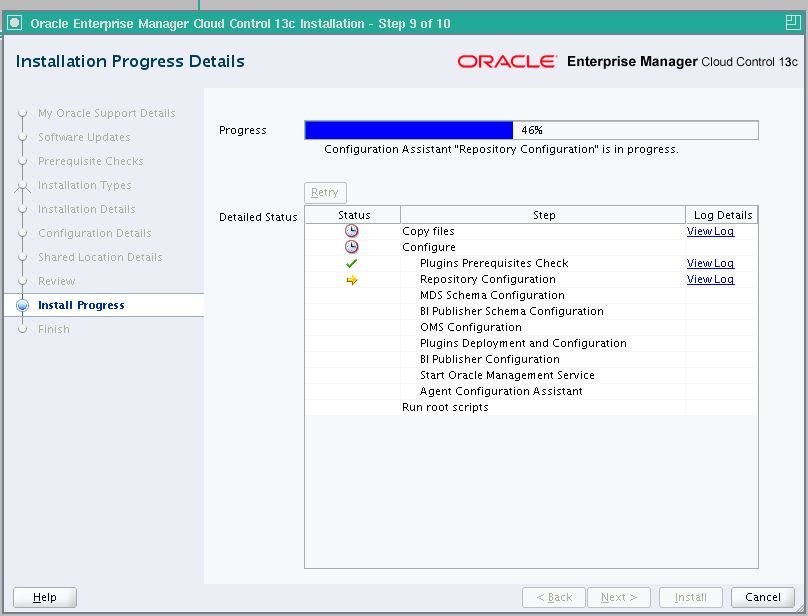

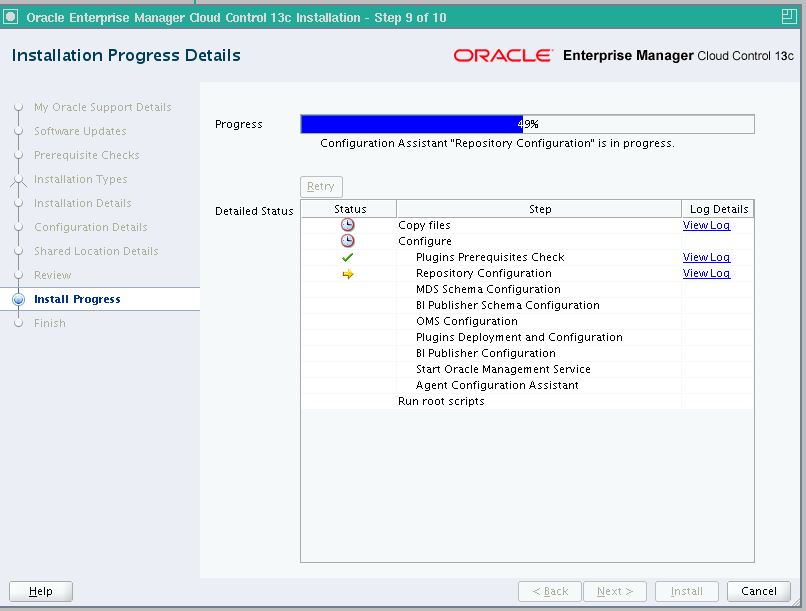

Just know that from 46% until past 50%, it takes a while to get everything compiled and configured, so don’t get frustrated and think it’s hung. It just has a lot of work to do during this time.
